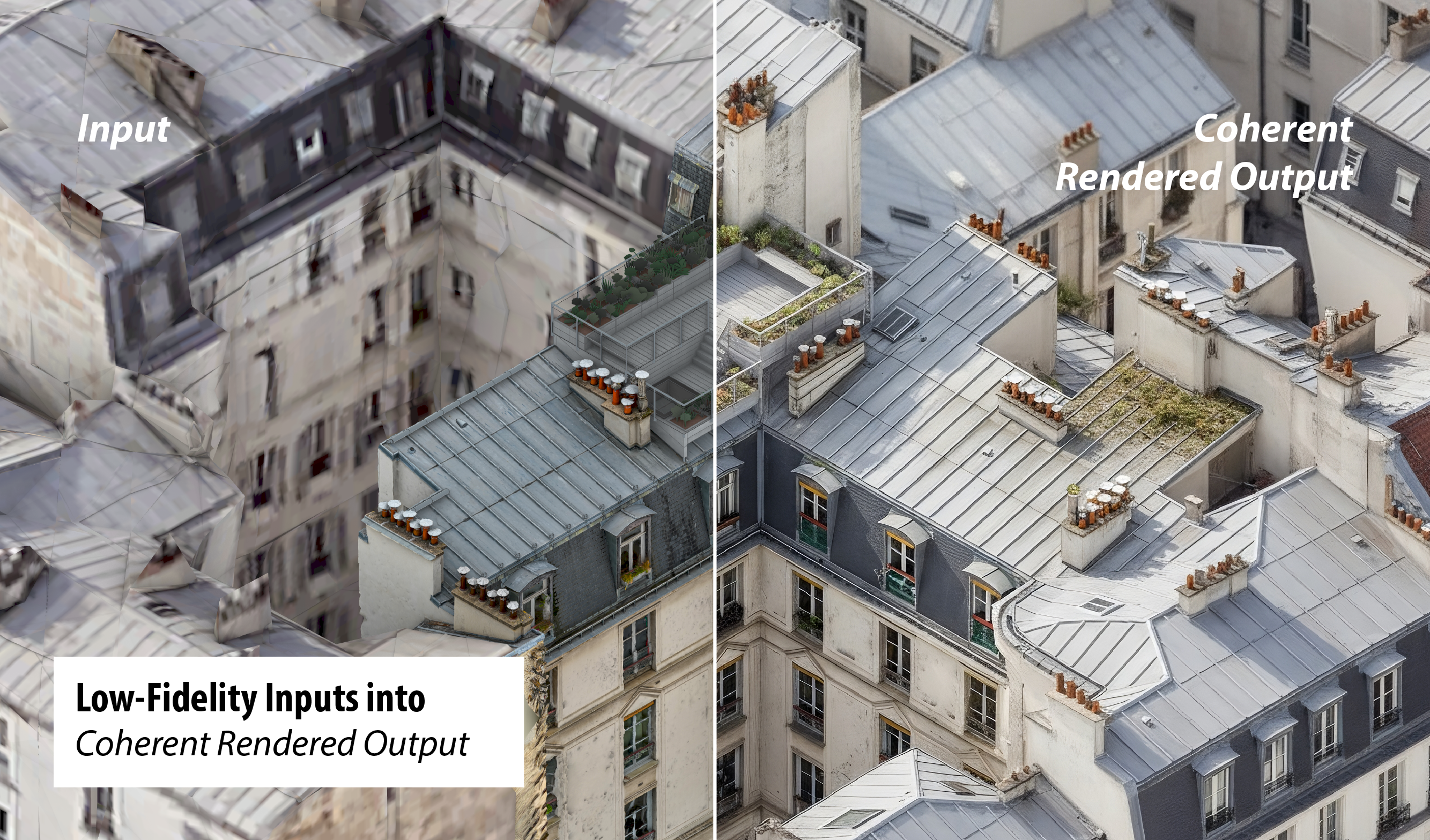

Low-Fidelity Inputs into Architectural Renderings

Zurich, Switzerland / 2025

For Roofscapes

How can a low-fidelity collage of screenshots, 3D scans, and Rhino view captures be transformed into a visually compelling architectural rendering?

That question — originally raised by Roofscapes — sparked this experiment. AI should be able to help us bridge that gap between raw input and refined visualization.

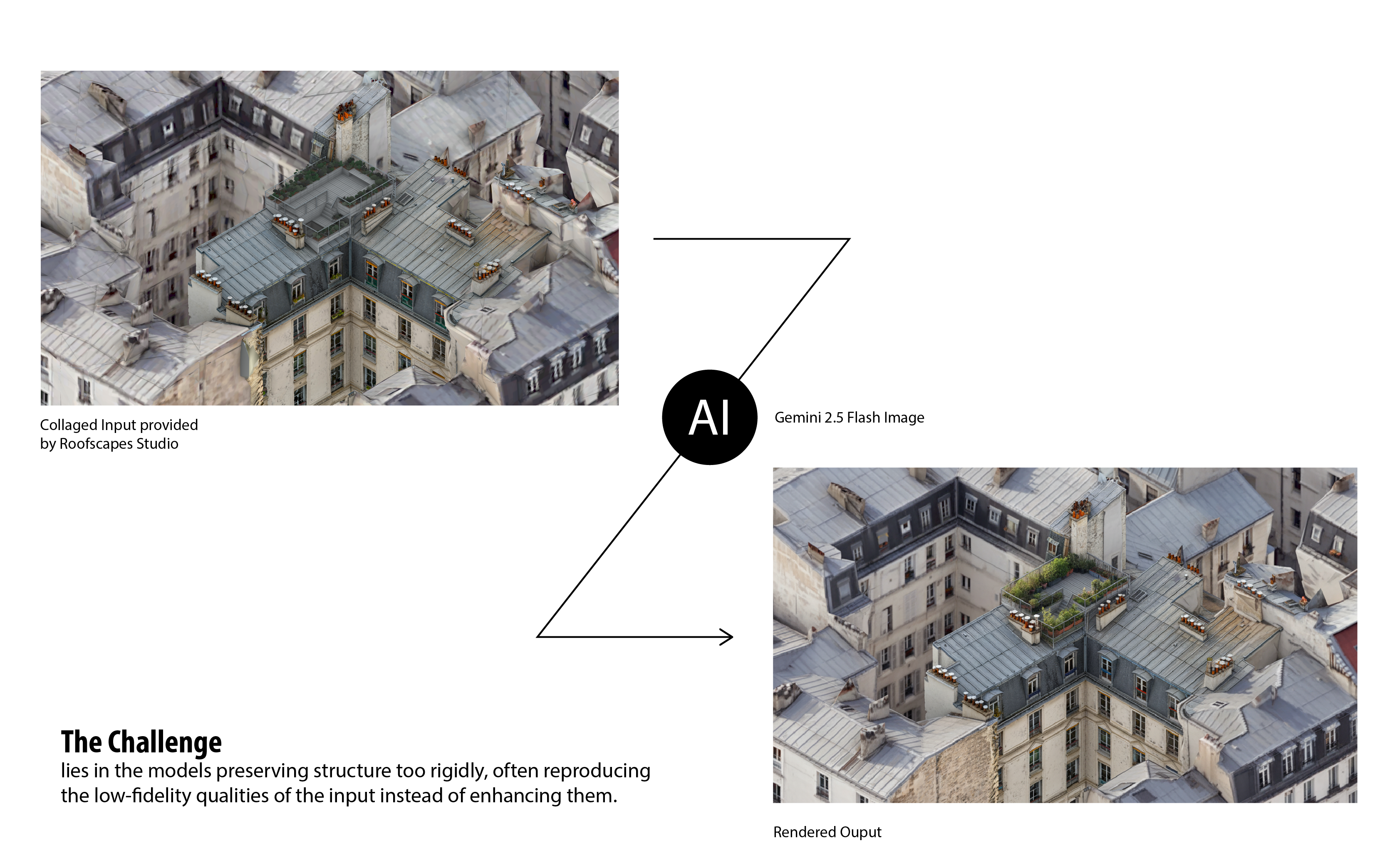

Yet, even the most advanced models face a paradox: they’re incredibly good at preserving structure and geometry, but sometimes too good — faithfully reproducing the low fidelity of the input instead of elevating it. Finding that balance between precision and transformation remains the real challenge.

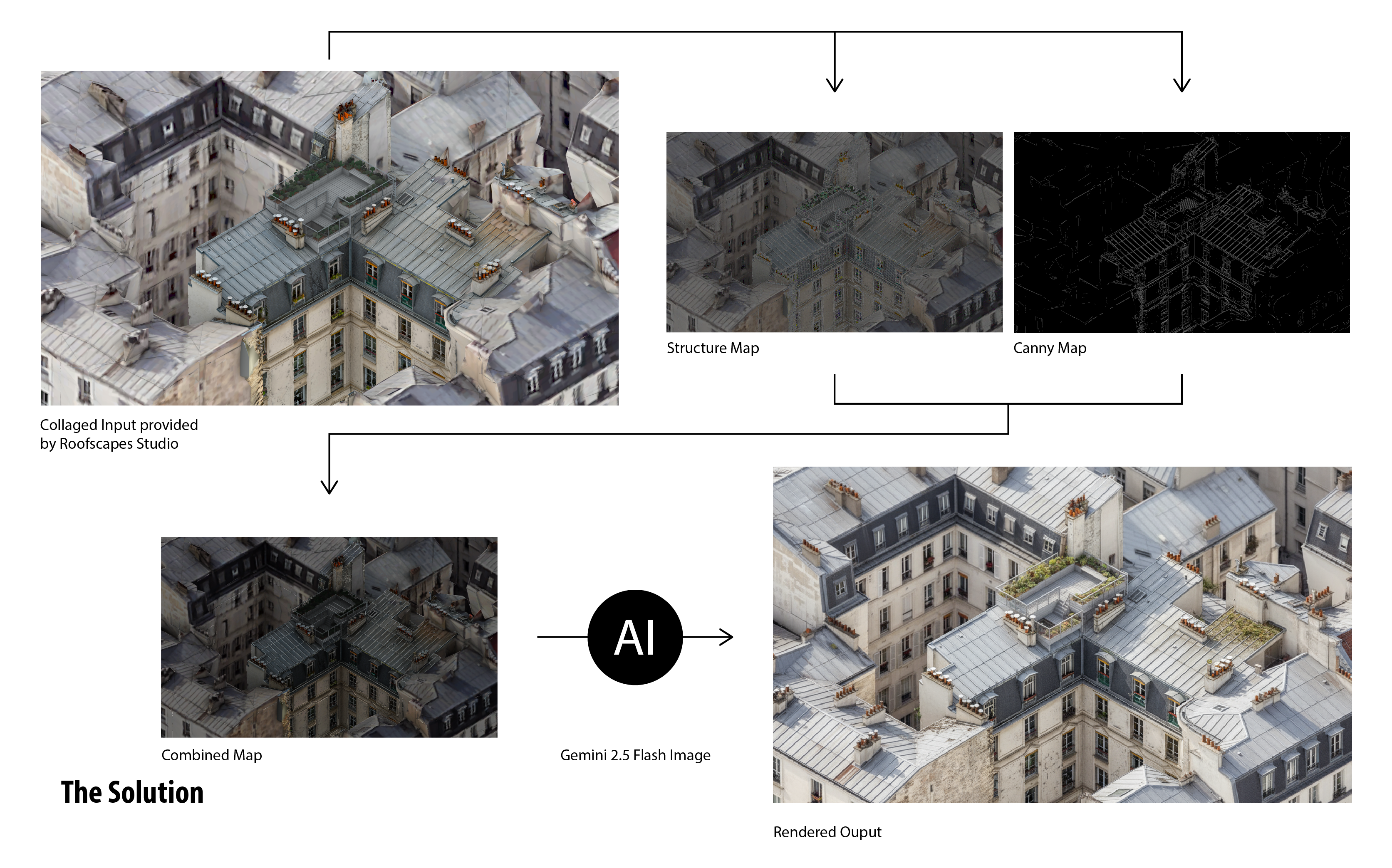

For this project, I set out to design a workflow that feels light, fast, and easy to set up. Normally, I’d reach for Flux or Stable Diffusion paired with custom-trained LoRAs, but this time I went another route — tapping into the new Google Gemini 2.5 Flash API.

The final setup uses a compact for-loop that extracts both a Canny edge map and a structural pass, then merges them into a single input for Gemini. The result is a surprisingly coherent, stylized image in which the original geometry and its surrounding context align seamlessly, turning fragmented data into something that actually feels designed.
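To make the loop more concrete, here is a minimal sketch of the idea in plain Python. It is not the actual workflow file. Every name in it (`sobel_edges`, `merge_passes`, `build_control_images`) is hypothetical, and the "structural pass" is assumed to be a second grayscale image such as a depth or line render. The edge step is a simplified Sobel threshold standing in for a real Canny detector (in practice something like OpenCV's `cv2.Canny` would do this job), and the per-pixel maximum used for merging is only one plausible way to combine the two passes.

```python
# Sketch only: grayscale images are nested lists of ints in the range 0..255.

def sobel_edges(gray, threshold=128):
    """Binary edge map (0 or 255) from Sobel gradient magnitude.

    Simplified stand-in for Canny: no smoothing, no non-maximum
    suppression, no hysteresis. Border pixels are left at 0.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 255
    return edges


def merge_passes(edges, structure):
    """Combine two same-sized passes with a per-pixel maximum (an assumption)."""
    return [[max(e, s) for e, s in zip(edge_row, struct_row)]
            for edge_row, struct_row in zip(edges, structure)]


def build_control_images(frames):
    """For each (gray, structure) pair, return one merged control image."""
    merged = []
    for gray, structure in frames:
        merged.append(merge_passes(sobel_edges(gray), structure))
    return merged
```

Each merged image would then be sent to the image model together with a text prompt. The per-pixel maximum keeps the crisp edge lines visible on top of the softer structural pass, which is one way to hand the model both geometry cues in a single picture.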

Download the workflow here